Fiddler, Excel, and "Good Enough"

My family's dog of 10 years, Neko, unfortunately passed away a couple of months ago. My parents decided that now was a good time to start looking for another beautiful dog to bring into their life, but were having some trouble finding a dog that fit their requirements. Neko, and our dog before that, Macy, were both black British Labrador females. More than that, they actually came from the same breeder, some 14 odd years apart. Unfortunately the breeder didn't have any available, and so my dad went out to get a list of breeders that he could contact. So he shot me an email.

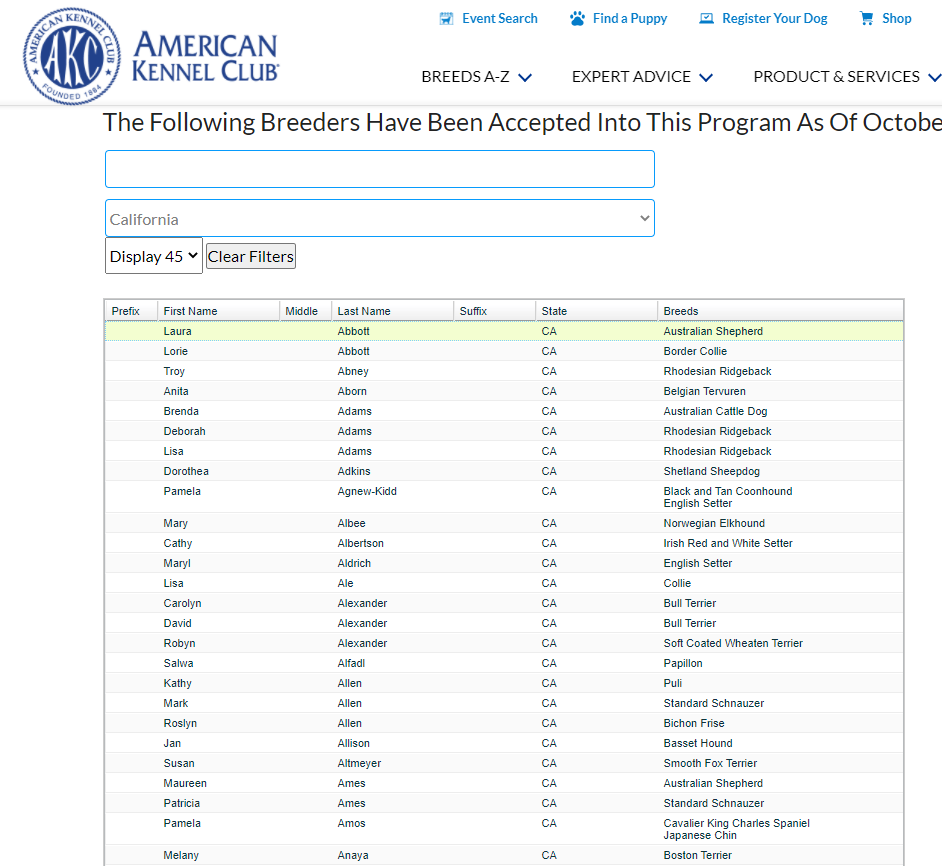

The American Kennel Club (or AKC for short) has a "Breeder of Merit" program. If you're looking to get a dog, I would highly recommend looking at your local shelter dogs as your first priority, but my parents had something pretty specific in mind. The issue is that the list of breeder's forces you to select a single state, can't sort by breed, and only lets you see 45 at a time.

There are a couple of elements that immediately make me think that we can work around this. First, the website has a cfm extension, which means that they're using ColdFusion, and usually means that it's a DIY website with not a ton of backend sophistication. Secondly, the 45 restriction is unlikely to be something that's required by the server, and more just a client-side restriction. Fiddler is the perfect tool for something like this.

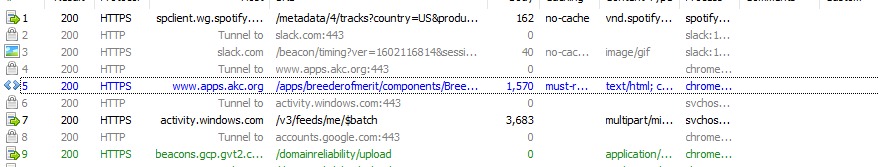

Fiddler is a debugging proxy tool for logging, inspecting, and altering HTTP and HTTPS traffic. Basically when you do something on a website, a request goes from your computer to the server that powers the website. Fiddler sits in-between that connection, and lets you see what goes out and what comes in. Tools like this are very dangerous when an attacker is using them (this is a "Man In The Middle" or MITM attack) to nab your bank account details on the way to logging in, but are very useful when you're using it yourself. We can fire up Fiddler, and fire off the search again, making sure to look for the blue "POST" request.

There's one that's making a request to "apps/breederofmerit/components/BreederOfMerit.cfc", and that looks right up our alley. Clicking over to Inspect the traffic, we can see that it has a list of parameters that it's passing to the backend.

{"page":1,"pageSize":45,"gridsortcolumn":"","gridsortdirection":"ASC","sid":"ecom","cde_state_province":"CA","filter":""}

We can also take a look at the raw response that's being passed back by the server.

{"QUERY":{"COLUMNS":["BREEDER_NAME","CDE_STATE_PROVINCE","TEXT_NAME_LAST_OR_OTHER","TEXT_NAME_FIRST","CUSTOMER_NUMBER","BREEDS"],"DATA":[["Laura A","CA","A","Laura","#...","Australian Shepherd"],...},"TOTALROWCOUNT":"1604"}

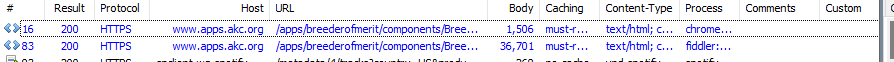

I ...'d some of the information out, but you can see roughly what's being returned. It also tells you the total row count, which is super helpful for this case. Alright, so now we can kind of see how the "Fiddler" bit of the title comes in, but what about the "Excel" and "Good Enough" bits? At this point, we have a request that we can tweak slightly and send again. We could increment the page number and get the second page of results. We could try and sort on the breed, and only return the top ones because "British Labrador" is pretty close to the top. We could try and figure out how the filter parameter works, and use that to search for Labrador directly. We also want states other than California (we're looking at a multi-state radius including CA, OR, NV, AZ, WA, and UT), so we could spend some time trying to figure out how to expand the state search. These are all possible approaches, but I don't care about an elegant solution, I just want the data. So let's just set the page size to 1604. And it works like a charm.

First one has the 45 rows of data, the second one has all 1604. Rather than process them in Python or anything, a simple regex (replacing \[(.*?)\], with \1\n) gets it into a format that can be read as a CSV file. Rather than coming up with some clever way to handle the multiple states, I just did it 6 times for each state. I took all of it, dumped it in one large Excel document, and filtered by breed. Voila! From start to finish under 15 minutes, and something that would have taken ages to do by hand.

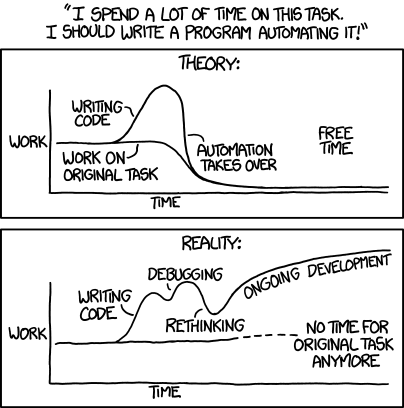

The moral of the story is twofold:

- If a website is doing it, so can you just by mimicking the request. You'd be surprised at what you can get away with when people expect that the only thing being sent up is from their own code.

- If you're doing something once, the slightly more manual hacky way usually is a better use of your time.